Students who are interested in research – especially juniors and seniors preparing for independent work – are often encouraged to master a fully featured statistical package such as Stata or R for their statistical analysis. In the Economics program at Princeton, for example, Stata is often the software of choice for classes like ECO 202 (Statistics and Data Analysis for Economics) and ECO 302/312 (Econometrics). Other departments and programs (for example, the Undergraduate Certificate Program in Statistics and Machine Learning) offer courses such as SML 201 (Introduction to Data Science) or ORF 245 (Fundamentals of Engineering Statistics) that train students in R. Students usually develop a preference for one or the other, even if they eventually become proficient in both. While our coursework (rightly!) emphasizes statistical methods, we, as students, are often left to navigate the intricacies of the statistical tools on our own. This post is a primer on some of the core R packages used for advanced statistical analysis, and I hope you will find it useful as you begin searching for tools in R that can help with your own analysis.

You might wonder what is so different between R and Stata. While their core functionality is the same, the two differ in how you interact with them and in how they approach their tasks. As you might have guessed from the title of this post, I prefer R to Stata: I find its logic easier to follow because its syntax is quite similar to that of other programming languages like Java. I also find it easier to produce aesthetically pleasing graphs and documents in R than in Stata.

However, in the more advanced statistics and econometrics classes, I learned how to run complex regressions in Stata. This left me in a somewhat awkward position: while the coursework required Stata, in the back of my mind I wished I could use the coding capabilities of R instead! I imagine there are quite a few other R aficionados like me: students who work with and analyze vast amounts of data in their research and who value the power and flexibility of R.

Thanks to my internship this summer (which, to my delight, I have now been given the opportunity to continue this fall with Dr. Kalhor at C-PREE), I was able to apply in R, through a variety of packages, many of the econometric methods I learned in courses like ECO 312. What follows is a discussion of the packages I found most helpful in my research. While by no means exhaustive, this list should give you a respectable starting point for advanced statistical analysis in R (with, given my background, a probable bias toward econometrics).

- Packages for analyzing panel data: If you want to analyze panel data – i.e., data with repeated observations over time for a set of units, such as macroeconomic indicators like GDP, the debt-to-GDP ratio, and interest rates for several countries across many years – the plm package is a good choice. Once you have loaded plm, you can estimate a fixed effects model with the plm function. If your data are stored in wide form (for example, one column per year), you first need to convert them to long form, with one row per unit and time period. The gather function from the tidyr package (part of the tidyverse) does this, although tidyr now recommends its successor, pivot_longer. You can then estimate the fixed effects model by calling plm with four arguments: formula, the model you are estimating, written exactly as you would write it for the familiar lm function; data, your data frame in long form; index, a character vector holding the column names of your unit identifier and your time variable; and model, which should be set to “within”. Note that plm uses the within (entity-demeaning) transformation and therefore does not report the individual fixed effects as coefficients, although you can recover them afterwards with the fixef function.

- Packages for running two-stage regressions: To run a two-stage least squares (2SLS) regression with instrumental variables, which becomes necessary when the error term is correlated with some of the regressors in an ordinary least squares (OLS) regression, the easiest route is the ivreg package. While it is not too difficult to carry out the two stages by hand, the ivreg function makes it a breeze and also reports correct standard errors, which a naive by-hand second stage does not. The syntax is the same as for the lm function, with one small change: in the regression formula, you first enter your regressors, type “|”, and then enter your instruments. Any exogenous regressors must appear on both sides of the “|”, because they serve as their own instruments.

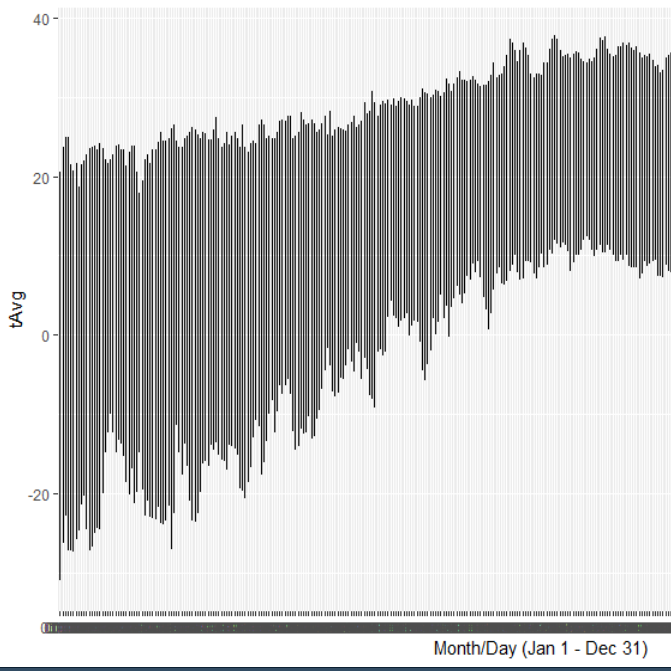
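To make the panel-data workflow from the first bullet concrete, here is a minimal sketch on simulated data. The wide layout (columns such as x_2000 and y_2000), the country labels, and the true slope of 0.5 are all hypothetical. I use pivot_longer rather than gather here because it can split several variables out of the column names in a single call; with gather you would need extra separate and spread steps.

```r
# Minimal sketch: wide-to-long reshaping plus a fixed effects ("within") model.
# All data are simulated; names like x_2000 and "C1" are hypothetical.
library(tidyr)
library(plm)

set.seed(1)
n_units <- 30
years <- 2000:2009
n_years <- length(years)

# Unobserved country effects; x is built to be correlated with them,
# so pooled OLS is biased while the within estimator is not
alpha <- rnorm(n_units, sd = 2)
x <- matrix(rnorm(n_units * n_years), n_units) + alpha
y <- alpha + 0.5 * x + matrix(rnorm(n_units * n_years, sd = 0.5), n_units)
colnames(x) <- paste0("x_", years)
colnames(y) <- paste0("y_", years)

# Wide layout: one row per country, one column per variable-year pair
wide <- data.frame(country = paste0("C", seq_len(n_units)), x, y)

# Long layout: one row per country-year, one column per variable
long <- pivot_longer(wide, cols = -country,
                     names_to = c(".value", "year"), names_sep = "_")
long$year <- as.integer(long$year)
long <- as.data.frame(long)

pooled <- lm(y ~ x, data = long)
fe <- plm(y ~ x, data = long, index = c("country", "year"), model = "within")

summary(fe)
coef(pooled)["x"]  # pulled upward by the omitted country effects
head(fixef(fe))    # country effects recovered after estimation
```

Because x is constructed to move with the country effects, the pooled lm slope overshoots, while the within estimate should land close to 0.5.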
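Here is a similarly hedged sketch of the instrumental-variables workflow, again on simulated data: u is a hypothetical unobserved confounder, z a hypothetical instrument, and w an exogenous control. It uses the ivreg function from the standalone ivreg package on CRAN (an older ivreg function with the same two-part formula interface lives in the AER package), and it also runs the two stages by hand to show that the point estimate matches.

```r
# Minimal sketch: 2SLS with ivreg on simulated data (all variables hypothetical)
library(ivreg)

set.seed(7)
n <- 1000
z <- rnorm(n)  # instrument: moves x but has no direct effect on y
w <- rnorm(n)  # exogenous control variable
u <- rnorm(n)  # unobserved confounder entering both x and y
x <- 0.8 * z + 0.5 * w + u + rnorm(n)    # endogenous regressor
y <- 1 + 2 * x + 0.5 * w + u + rnorm(n)  # true slope on x is 2
dat <- data.frame(y, x, w, z)

# OLS is biased because x is correlated with the error term (through u)
ols <- lm(y ~ x + w, data = dat)

# Regressors before "|", instruments after; w is exogenous, so it appears on both sides
iv <- ivreg(y ~ x + w | z + w, data = dat)
summary(iv)

# The same point estimate by hand: regress x on the instruments, then use the fitted values
first <- lm(x ~ z + w, data = dat)
dat$x_hat <- fitted(first)
second <- lm(y ~ x_hat + w, data = dat)
coef(second)["x_hat"]  # matches coef(iv)["x"], but its standard error is not valid
```

The by-hand second stage reproduces the coefficient, but its standard errors are computed from the wrong residuals, which is the main practical reason to let ivreg handle both stages.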

- Packages for time series analysis: For analyzing time series data – i.e., data collected sequentially over time, such as the hourly temperature and precipitation at a weather station – three packages are especially useful: tseries, urca, and vars. The tseries package covers many everyday tasks in time series analysis. For example, you can use it to test for stationarity (i.e., whether the statistical properties of the series, such as its mean and variance, stay constant over time). To perform an Augmented Dickey-Fuller (ADF) test, use the adf.test function with two arguments: x, your time series (either a plain vector or a time series object created with the ts function); and k, the number of lags. The package also offers related tests, e.g., the Kwiatkowski–Phillips–Schmidt–Shin (KPSS) test via the kpss.test function; note that the KPSS test takes stationarity as its null hypothesis, whereas the ADF test takes a unit root (non-stationarity) as its null. The urca package can be used for cointegration tests such as the Johansen test, which is run with the ca.jo function. Finally, the vars package, as the name suggests, lets you estimate a vector autoregression (VAR) model. To do this, call the cbind function on a group of time series to combine them into a single object, and then use the VAR function on this object with an appropriate number of lags (the VARselect function can help you choose it). The package also offers several ways to interpret the fitted model, such as the irf function, which computes impulse response functions that you can then plot.
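For the tseries tests, a minimal sketch contrasts a simulated stationary AR(1) series with a simulated random walk. The AR coefficient of 0.5, the series length, and k = 4 lags are arbitrary choices for illustration, not recommendations.

```r
# Minimal sketch: ADF and KPSS tests from tseries on simulated series
library(tseries)

set.seed(123)
n <- 200
stationary  <- arima.sim(model = list(ar = 0.5), n = n)  # stationary AR(1)
random_walk <- ts(cumsum(rnorm(n)))                      # has a unit root

# ADF: null hypothesis = unit root (non-stationary)
adf_st <- adf.test(stationary, k = 4)
adf_rw <- adf.test(random_walk, k = 4)

# KPSS: null hypothesis = level stationarity (the reverse of ADF)
kpss_st <- kpss.test(stationary, null = "Level")
kpss_rw <- kpss.test(random_walk, null = "Level")

print(c(adf_stationary   = adf_st$p.value,
        adf_random_walk  = adf_rw$p.value,
        kpss_stationary  = kpss_st$p.value,
        kpss_random_walk = kpss_rw$p.value))
```

Since the two tests have opposite null hypotheses, a clearly stationary series should typically give a small ADF p-value and a KPSS p-value that is not small, and a random walk the reverse. tseries may warn that a p-value lies outside its lookup table; that warning is informational.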
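A sketch of the Johansen test with the ca.jo function from urca follows. The two hypothetical series share one random-walk trend, so by construction they should be cointegrated; the settings type = "trace", ecdet = "const", and K = 2 are illustrative, not recommendations.

```r
# Minimal sketch: Johansen trace test on two simulated cointegrated series
library(urca)

set.seed(2024)
n <- 300
common_trend <- cumsum(rnorm(n))     # shared stochastic trend (unit root)
y1 <- common_trend + rnorm(n)
y2 <- 0.5 * common_trend + rnorm(n)  # y1 - 2 * y2 cancels the trend
pair <- cbind(y1, y2)                # one column per series

# Trace version, constant inside the cointegrating relation, K = 2 lags in levels
jo <- ca.jo(pair, type = "trace", ecdet = "const", K = 2)
summary(jo)

# For two series the rows are "r <= 1" and then "r = 0"; rejecting r = 0 at
# the 5% level points to at least one cointegrating relation
reject_r0 <- jo@teststat[2] > jo@cval[2, "5pct"]
reject_r0
```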
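Finally, here is a sketch of the vars workflow described above: combine the series with cbind, fit the model with VAR, and plot impulse responses from irf. The data come from a hypothetical stable two-variable VAR(1) with coefficient matrix A, and choosing the lag order by AIC through VARselect is one reasonable option among several.

```r
# Minimal sketch: VAR estimation and impulse responses with vars (simulated data)
library(vars)

set.seed(99)
n <- 400
A <- matrix(c(0.5, 0.2, 0.1, 0.4), nrow = 2)  # true lag-1 coefficients (stable)
z <- matrix(0, nrow = n, ncol = 2)
for (t in 2:n) z[t, ] <- A %*% z[t - 1, ] + rnorm(2)

# Combine the individual series into one multivariate object with cbind
series <- cbind(x1 = ts(z[, 1]), x2 = ts(z[, 2]))

# Let an information criterion suggest the lag order, then fit the VAR
lags <- VARselect(series, lag.max = 6, type = "const")$selection[["AIC(n)"]]
model <- VAR(series, p = lags, type = "const")
summary(model)

# Response of x2 to a shock in x1 over 10 periods, with bootstrap bands
responses <- irf(model, impulse = "x1", response = "x2", n.ahead = 10)
plot(responses)
```

Acoef(model) returns the estimated lag matrices, which makes it easy to compare the first one against the simulated A.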

Of course, R is too vast to be covered in a single post, but these packages should be a useful starting point. More importantly, once you are comfortable with them, you will become much more adept at finding the more specialized tools you may need for your own research. Finally, whenever you need a more technical understanding of a function, you can always consult the R documentation for each package and its functions.

I hope that those of you who, like me, prefer R to Stata find this guide helpful as you continue to analyze increasingly complex models in your own research!

– Abhimanyu Banerjee, Social Sciences Correspondent