I enrolled in GEO/WRI 201, Measuring Climate Change: Methods in Data Analysis & Scientific Writing, last fall to challenge myself and learn how to integrate field work, scientific analysis, and writing. Although I already had cursory experience in these areas thanks to a Freshman Seminar on Biogeochemistry in the Everglades and a summer of assisting ecological fieldwork in Mozambique, I had never created and executed my own field project from start to finish. In my naiveté, I presumed that although the course would be difficult, I would conduct research that had a clear and established “finish-line” and that I would reach it.

My project centered on quantifying changes in vegetation cover in Utah over the past twenty years using satellite imagery. The first couple of months of the class were frustrating, and I floundered from deadline to deadline. Most of the science courses I had taken offered a framework: a set of given questions with specific, correct answers. In real research, I found, you must create the questions, and they don’t necessarily have “correct” answers.

Despite my struggles, the project seemed to be coming along. Using images from multiple Landsat satellites, I created a beautiful figure showing a steep decline in vegetation tightly correlated with rising temperatures and decreasing rainfall. Genuine results linking changing climate to remotely sensed vegetation! I was thrilled.

However, after weeks of writing and data analysis, I discovered that the seemingly important trends I was writing about were, to put it bluntly, garbage. In a peer review session, another student in the class hypothesized that the apparent decline in vegetation might simply be an artifact of comparing imagery from different Landsat satellites. I scoffed, but I also started to worry.

Just to be sure, a few days later I tested her hypothesis… and discovered that it perfectly explained away the ‘interesting’ trends forming the backbone of my paper. Newer satellites produced lower vegetation indices regardless of changes to the ground cover. I was heartbroken. And so, with one week left before the due date, I had to reinvent my approach entirely, searching for a new motive and purpose.

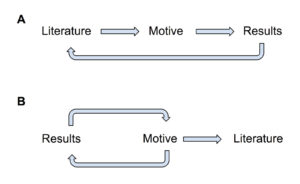

However, I was not starting from square one: I had learned a valuable lesson. I realized that at the outset of the class, I had prioritized data analysis, and motivating my research took a backseat. This decision pushed me into the trap of taking my results and trying to reverse-engineer a motive for my project, like making up questions based on an answer. As a result, I had little background in the literature concerning my work. In contrast to my approach, successful research is based on a convincing motive that builds on key literature to contextualize and explain the broader importance of a specific research question.

And so, in the eleventh hour, I delved into the literature concerning Landsat calibration, something I should have done months before, and found that the issue that derailed my paper affected many researchers studying Landsat imagery. As a result, I decided to reorient my paper to address that problem by showing the variation between Landsat products and offering a practical method for calibrating the imagery. By using my failure to motivate new research, I created a paper that featured real motive and important results.

Through my successes and failures, I gained a deeper appreciation and understanding of scientific writing and research. There are no ‘finish-lines,’ and there is no specific route to take. A researcher cannot just pick a path and walk straight; research demands flexibility to change directions, awareness to recognize interesting and important paths, diligence to realize errors and dead ends, and fortitude to overcome failures.

— Alec Getraer, Natural Sciences Correspondent